Internet video is everywhere.

That’s probably not a super surprising sentiment coming from somebody who works at Mux, where we’ve got customers working on stuff as varied as streaming concerts live to your phone or helping companies distribute critical updates to their employees in an engaging way. But, for real, it’s everywhere to the point where, when you stop and think about it, you can almost view it as background radiation. Just think of those GIFs-that-are-actually-MP4s when you’re scrolling through Twitter, or the last time you needed a product review--my vice is woodworking tools, and if you say “shouldn’t that be vise” I’m going to glare at you over the internet--and ended up in a YouTube rabbit hole.

This stuff is everywhere. And while here at Mux we’re big on letting you focus on getting great content out to your consumers, it can also be edifying to know how we got here, why things are the way they are, and maybe take a stab at what’s next. Which brings me to this week’s definitely-not-biting-off-more-than-I-can-chew topic, as the title probably clued you into: In The Beginning There Was Static. This title is much shorter, though less descriptive, than my working title, A Random Walk Towards and Sometimes Away From Slightly Fewer Macroblocks In Optimal Situations. That wouldn’t fit as an HN title, so it was axed. But anyway, today we’re going to talk about around seventy-five years of history in the video space, from about 1927 to about 2003. Next time we’ll get into what’s happened since--the rise of H.264 to be basically everywhere, H.265 HEVC, Ogg Theora, VP8, all that stuff. But we’ve got some ground to cover to get there.

One quick note before we start ruminating. When I’m writing these kinds of things I have to be acutely aware that there are only so many electrons I can perturb on the topic, and regardless of how many of those I wobble on your screen it probably won’t be enough. So I’ve opted to consider this more conversational than exhaustive and I’ll try to link liberally throughout for additional reading when there’s something I think that a curious reader might find interesting.

Analog broadcasts

For the sake of my sanity, we’re going to elide the first fifty-ish years of moving pictures. That means we’re going to be skipping over film in general (as a reel of film cels delivered by the postal service doesn’t have much of a throughline to H.265 and VP9) and we’re going to be skipping over the genuinely fascinating mechanical television because, as wild as that thing is, it’s ultimately a dead end of technology. So we’re going to pick up in the late 1920s with a guy named Philo Farnsworth and his experiments with the cathode ray tube, or CRT.

It’s worth noting that Philo Farnsworth isn’t the inventor of the cathode ray tube. The earliest iteration of one is from the end of the 19th century. And he’s not the first person to record recognizable electronic video. But the reason why Farnsworth gets a lot more mention than Kenjiro Takayanagi or Vladimir Zworykin is, in large part, that Farnsworth built a thing that shipped, by itself, in its entirety. In 1927 he’d built, and by 1929 he’d largely worked the kinks out of, the image dissector camera. Farnsworth’s image dissector camera was built around that cathode ray tube--most folks of a certain age know of a CRT as a thing we’ve used to display images in a television, but until the advent of the CCD image sensor in the 1980s they were used to capture images as well.

By 1934, Farnsworth was demonstrating an end-to-end live broadcast, all the way from a camera to a recognizably electronic television.

Whoa.

And while he was doing that, lawyers at companies you might have heard of even today--companies like RCA and Westinghouse--were flinging patent lawsuits hither and thither. And that is a rabbit hole for another day, but eventually the Farnsworth Television and Radio Corporation made him a very wealthy man. (Farnsworth went on to make a nuclear fusor. Yeah, as in “nuclear fusion”. Interesting guy.) Roughly contemporaneous with the U.S.-based adventures in electronic video were others in Europe, notably the Emitron and its descendants that were designed for the BBC.

So we've got a camera, and we've got a way to play back the recordings (sort of - it'll get better soon). But what do we do with it? And can we piggyback off this newfangled radio thing?

In writing this, I noticed something interesting about the mental model the folks I polled had for broadcasting. I polled half a dozen folks and asked for a guesstimate of when radio broadcasting really took off generally in the United States, and the median was about 1875. In reality? Try 1920. (I think folks might’ve conflated radio with telephone.) And so when we start to talk about television broadcasts in the late 1930s and early 1940s we’re really only twenty years into this whole thing. On top of that, radios are pretty simple and there’s only two real ways to encode an analog audio signal in a way that simple electronics can handle--making amplitude modulation (AM) and then frequency modulation (FM) radio a pair of obvious standards that took root pretty widely.

Video is, it will shock no one to hear, a little more complex. How do you encode it? Cathode ray tubes work based on “lines”--how do you encode the idea of a line? How many lines make up a picture (a frame)? How fast can those be sent to create an image of the desired quality? Then what happens when you want to add color to the mix--by 1940 this was starting to become a reality in large part thanks to John Logie Baird, the mad Scotsman of the aforementioned mechanical television--while still putting out a signal that a black-and-white television could consume? Unlike radio, this is a set of questions where it’s not that hard to come up with good answers, and reasonable people could pick different parameters for a number of them. And the nice thing about standards, of course, is that there are so many to choose from.

For the sake of brevity we’ll elide a good bit of post-WWII history here where even in the United States you had the Federal Communications Commission trying to work with existing broadcasters and manufacturers to come up with a standard they could all work with. First the FCC endorsed a standard from CBS (yes, that CBS) that provided color but wouldn’t interoperate with existing black-and-white sets; TV manufacturers wouldn’t play ball and the only compatible TVs were ones that CBS themselves manufactured. In response to that boondoggle of an effort the US television industry, through the National Television System Committee (yes, that NTSC), developed a “compatible color” (read: “your black and white TV still works”) standard based on an alternative RCA (yes, that...you get the idea) design. Sixty fields of 262.5 scan lines per second, of which 240 from each field were used as visible lines and they’d be interlaced together. If you do video, these numbers should sound really familiar: this is the 480i standard definition television format that was in use from 1953 to 2015. And to me that’s really cool: I think it’s fascinating to dig up the artifacts that show why technology is the way it is.
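If you want to see how those numbers hang together, here’s a minimal Python sketch of the arithmetic. It uses the rounded figures quoted above, and the variable names are mine, purely for illustration.

```python
# Rounded NTSC figures as quoted in the text above.
FIELDS_PER_SECOND = 60
LINES_PER_FIELD = 262.5
VISIBLE_LINES_PER_FIELD = 240

# Interlacing: two fields are woven together into one complete frame.
FIELDS_PER_FRAME = 2

frames_per_second = FIELDS_PER_SECOND / FIELDS_PER_FRAME
lines_per_frame = LINES_PER_FIELD * FIELDS_PER_FRAME
visible_lines_per_frame = VISIBLE_LINES_PER_FIELD * FIELDS_PER_FRAME
lines_per_second = FIELDS_PER_SECOND * LINES_PER_FIELD

print(frames_per_second)        # 30.0
print(lines_per_frame)          # 525.0
print(visible_lines_per_frame)  # 480
print(lines_per_second)         # 15750.0
```

So the “480” in 480i is just the 240 visible lines of each field, doubled, and the “i” is the interlacing that does the doubling.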

Meanwhile, in the U.K., John Logie Baird wanted 1000-line color as a standard, which would’ve been roughly equivalent to the 1080i signals considered Really Heckin’ Nice in the 2000s. And Baird proposed this in 1943, ten years before the 1953 NTSC 480i definition in the United States and well before Europe rallied around what would become the 576i PAL standard in 1962. (Retro-game enthusiasts, wave your SCARTs in the air, please and thank you.)

Think about it. 1080i in 1943. What-if, right?

Digital video

Analog broadcast signals have an interesting relationship with data bandwidth. To send a given amount of data per second via amplitude modulation (the same AM as AM radio) you need to have a chunk of the radio spectrum wide enough to send it. For NTSC, this is about a 6MHz band. There is a problem here, as those of us old enough to remember the rabbit ears on the television might recall: we only have so many 6MHz bands, and thus channels, we can work with. For those too young: consider Wi-Fi channels and why you’re only supposed to use the 2.4GHz channels 1, 6, and 11 in most environments.

But say it’s the 1970s and you’ve made the jump to the digital realm, and for the sake of slightly anachronistic argument let’s take for granted that you’ve already defeated the MCP and your sequel is a long way away. We’re starting to send video over digital modes of communication--streams of bits per second. And while the analog demands on I/O are not trivial for picking up broadcasts, sending video digitally is a strain on more generally constrained resources. Sending lossless, uncompressed video is absolutely not an option, and you can do the math yourself: 480 lines of 640 samples (pixels), 30 times a second, at something like 16-bit color (call it 5 bits for red and blue, 6 bits for green, because of the eye’s greater sensitivity to green), and you’re talking about 147,456,000 bits per second, or about 147.5Mbit/sec.
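If you’d rather not do the math yourself, here’s a small Python sketch of it. The function name and parameters are hypothetical, just a convenient way to spell out the multiplication.

```python
def uncompressed_bitrate(width, height, frames_per_second, bits_per_pixel):
    """Return the raw, uncompressed video bitrate in bits per second."""
    return width * height * frames_per_second * bits_per_pixel


# 640 samples per line, 480 lines, 30 frames a second, 16 bits per pixel.
bits_per_second = uncompressed_bitrate(640, 480, 30, 16)

print(bits_per_second)                                # 147456000
print(f"{bits_per_second / 1_000_000:.1f} Mbit/sec")  # 147.5 Mbit/sec
```

Swap in other resolutions, frame rates, or bit depths to see how quickly the number gets out of hand.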

(Nerd note: “16-bit color” is not the same thing as NTSC color, which is an analog derivation of a couple different out-of-phase signals. Being analog, it doesn’t have to be digitally bucketed and can fluctuate in all kinds of entertaining ways. And even once the signal gets to the TV it’s hard to really quantify what power curves any of-the-era TV would have on its electron guns--there’s a reason it’s sometimes called Never The Same Color. It’s also not in the same reference frame as the “8-bit color” or “10-bit color” of a modern display or camera, which is referring to the number of bits per color channel. Modern “8-bit color” is actually 24 bits per pixel. End of nerd note.)

147.5Mbit/sec is a lot of Mbit over not a lot of sec. But let’s be generous and let’s assume you’re a real sharp cookie at Xerox PARC and it’s 1975 or 1976: you’ve got Ethernet from your Alto to your coworker’s Alto and it’s screaming along at 2.94Mbit/sec. In the same building. And maybe the next building over.

I sense a problem here for our media overlords.

Heck--you couldn’t be guaranteed to be able to send that signal over commercial Ethernet until Gigabit Ethernet became standard in 1998/1999, and in practice not for quite a while longer. And outside of your office? Early 90’s bonded ISDN might get you 128Kbit/sec! (Note the K.) T1 lines, originally designed to trunk 24 simultaneous phone calls, were usually the best you could get to the outside world unless you had All Of The Money, and those nipped along at a positively blazing 1.544Mbit/sec.
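To put a number on how far off those links were, here’s a rough Python sketch comparing the uncompressed bitrate from earlier against the nominal link speeds mentioned above. It ignores protocol overhead entirely, so treat the ratios as optimistic; the names are mine.

```python
# Uncompressed 640x480, 30 frames a second, 16 bits per pixel.
RAW_VIDEO_BPS = 640 * 480 * 30 * 16  # 147,456,000 bits per second

# Nominal link speeds in bits per second, as quoted in the text.
links_bps = {
    "Bonded ISDN": 128_000,
    "T1": 1_544_000,
    "Xerox PARC Ethernet": 2_940_000,
    "Gigabit Ethernet": 1_000_000_000,
}


def shortfall(link_bps, video_bps=RAW_VIDEO_BPS):
    """How many times over the video stream would fill the link."""
    return video_bps / link_bps


for name, bps in links_bps.items():
    ratio = shortfall(bps)
    if ratio <= 1:
        verdict = "fits"
    else:
        verdict = f"needs about {ratio:.0f}x more bandwidth"
    print(f"{name}: {verdict}")
```

Of the four, only Gigabit Ethernet has room for the raw stream, and that’s before anybody else on the network wants to do anything.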

Don't even talk to me about your token ring network.

And the lack of digital bandwidth isn’t the only problem, of course. Processing power is at a premium, too. Those of us of a certain age will remember the Sega CD, and those of us of a certain age and a certain familial affluence will remember Myst et al., which promised to unlock the power of full motion video...at a resolution and a framerate where you could count the pixels while they chugged across your screen. But you had CDs! They could hold six hundred and fifty megabytes each! And it was right there! Aaaaand the affordable decoding and the graphics technology of the era couldn’t chew those videos at a decent clip, so out they went, anyway.

There’s only one solution: we have to send, and to chew on, less data. We need to send fewer pixels--which is also a contributing factor to having those tiny videos of yore, because we didn’t have great ways to render big ones even if we could get the data for them--and we need smarter ways to describe the pixels we do have.

Throughout the 1970s you can find paper after paper about video encoding, and most of them are interesting and don’t really work. Uncompressed pulse-code modulation video--which is not that dissimilar from an audio WAV file--wanted up to 140Mbit for 480i content, and, well, that gets you better than the aforementioned 147.5Mbit, but even if you’re at 40Mbit/sec you’re still at way too many M’s. Even one M is too many! But in the seventies, people who are way smarter than I am came up with the discrete cosine transform (DCT) that we still use today for digital video and audio. This is the big one, and the original inventor, Nasir Ahmed, has a neat little article on how he came up with it that was published in 1991. (I was 4 at the time of that article’s publication. Just saying.)
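For a feel of why the DCT matters, here’s a deliberately naive Python sketch of a one-dimensional DCT-II run over a single made-up row of pixel values. This is an illustration under my own assumptions, not how any particular codec implements it: real codecs work on two-dimensional blocks with fast, carefully specified transforms, and the sample values below are hypothetical.

```python
import math


def dct_ii(samples):
    """Naive, unnormalized DCT-II of a list of numbers (O(n^2))."""
    n = len(samples)
    return [
        sum(
            x * math.cos(math.pi / n * (i + 0.5) * k)
            for i, x in enumerate(samples)
        )
        for k in range(n)
    ]


# A hypothetical row of eight brightness values that changes smoothly,
# the way neighboring pixels in real footage often do.
row = [52, 55, 61, 66, 70, 74, 79, 83]
coefficients = dct_ii(row)

# Coefficient 0 is the sum of the samples; higher indexes describe
# progressively finer detail.
for k, c in enumerate(coefficients):
    print(k, round(c, 2))
```

For a smooth row like this one, nearly everything lands in the first couple of coefficients and the rest come out close to zero. That is the property an encoder leans on: the small coefficients can be stored coarsely or dropped, and the picture still looks about right.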

DCT wasn’t used in the first official standard for digital video, H.120. It was, however, used in the first one anyone could actually use on hardware of the time, H.261. This is also the big one; H.261 is the basis for all international video format standards since. The details of H.261 itself aren’t super important save one in-hindsight clever thing: H.261 actually did not specify how to encode the video, only how to decode it. As such, encoder designers could come up with their own algorithms for encoding, so long as they decoded cleanly. This led to innovation around different encoder technology and, eventually, some of those technologies would be incorporated into later standards.

H.261 is pretty much out of the picture these days, though ffmpeg and friends can decode it just fine. But it begat MPEG-1 (from the Moving Picture Experts Group), aka .mpg files, which is the only one of the familiar “MPEG” codecs to not be an international standard on its own. MPEG-1 begat MPEG-2, aka H.262. MPEG-3 was something of a dead end, intended to support HDTV before it became obvious that MPEG-2 could handle it. MPEG-3 is not, as I managed to get completely wrong in the first draft of the article, the audio format that definitely murdered the music industry in the nineties; that’s MPEG-1 Audio Layer 3, except when it’s MPEG-2 Audio Layer 3, because names are hard.

Quiet, you.

H.263 introduced MPEG-4, though, and H.264...well, that’s one we all know and love, right? H.264 AVC: Advanced Video Coding.

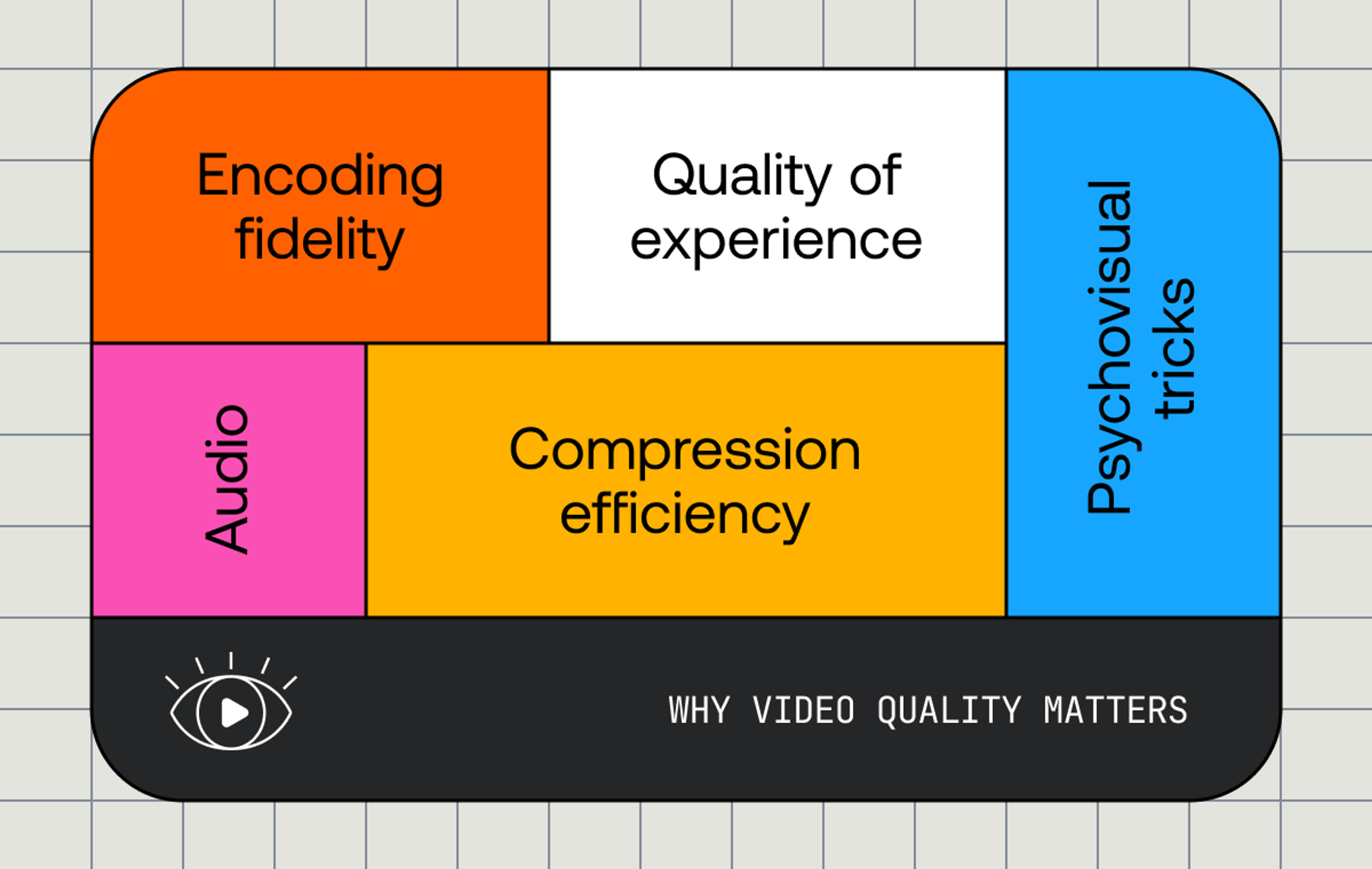

We’ll talk more about H.264 and why it’s so much better later, but here’s a brief blurb for this week: it is a family of standards that, all taken together, aggressively deal with most, but not all, of the “hard parts” of shipping a video to an end user. The H.264 spec is big, hairy, and has all sorts of wild stuff in there; it explains in head-shrinking detail how to use most of the cool stuff learned over the decade-plus prior to its inception and then on top of that it’s got some additions since. High-quality decoding of, comparatively, not a lot of data? It’s got you. The ability to put multiple streams in a single file so you can have, say, stereoscopic video for a VR headset? That was finalized in 2009. These video wizards have been planning this for a while.

And next time...

H.264 is a pretty tremendous work of collaboration that is not, by itself, the totality of why we have stuff like Netflix, why we have a generally interoperable streaming ecosystem and you can use OBS to stream to...I dunno...Mux. But, while today it’s had a seventeen-year run at the top of the heap, it’s not as though things have just stopped since.

Next time we’ll talk about H.265 HEVC, the future of H.266 VVC, and the grab bag of other encodings that are floating around today. And, because this wouldn’t be nearly complete without it, we’ll make a pit stop in HLS and DASH and recount the push to making streaming video actually viable.

Thanks for reading, and I’ll catch you next time.