“What’s your AI strategy” — everyone in San Francisco, right now. Being a founder who’s not on the hype train is embarrassing, so today we’re announcing that we’re no longer Mux: we’re now Mux.ai.

Just kidding (we do have the domain…). But AI really is becoming a critical component of the software landscape and of how people build and maintain products. We wouldn’t have been able to release quality, automatic captions for free if it weren’t for OpenAI’s incredible Whisper model, and we think that’s just the beginning.

So, what is our AI strategy here at Mux?

We see AI falling into three areas: Mux serving as the video core that interacts with AI tooling, utilizing AI for feature development, and Mux being the last mile provider for companies that are investing in their own AI models. We’re proudly an infrastructure provider, and I’m fairly confident that everyone in our product org would agree that we’ve got plenty of work to do on that front. Building amazing generative models is absurdly hard and takes deep expertise, and we’re proud to support companies doing that work like Tavus and others. Video models are hard, video infrastructure is hard, so together we make excellent products.

If you’re thinking, “OK, but just selling to a segment is hardly an AI strategy,” you’re right. The important note here is that we feel strongly that it’s best for us to focus on what we do best, and that’s not creating a new Mux API for text-to-video.

The feature development side of things is pretty self-explanatory. We’re constantly keeping an eye out for things like Whisper that can allow us to get feature requests into customers’ hands as quickly as possible. That doesn’t even speak to the internal use of tools like GitHub Copilot, or LLMs for reinforcing our voice and tone writing guidelines.

That leads to the last area, the one I’m most excited about.

The video core that interacts with your AI tooling

When we first launched Video in 2018, it was, frankly, kind of an uphill battle to inspire developers. Do you have videos? Great! You give us those videos, and now they’re streaming! Our image API was (and still is) very cool, and behind the scenes, we’re doing all sorts of cool things to make processing absurdly fast, but if you’re at a hackathon, video-in-video-out requires a pretty specific project.

Fast forward to today, and the product has matured immensely. The feature set is now in a place where, combined with your AI toolchain, it can create a video platform Megazord.

Recipes

Automatic translation/dubbing

Mux can already produce auto-generated captions as transcripts, but what if you want your audio translated (dubbed) into different languages? Let’s plug in a third party, Sieve in this example, to get this working.

Mux features used:

- Audio-only static renditions

- Multi-track audio

Workflow:

- Create a Mux video with an audio-only static rendition, or add audio-only to an existing one

- Send that audio-only output to Sieve with the languages you want

- Add that resulting audio file back to the video using multi-track audio

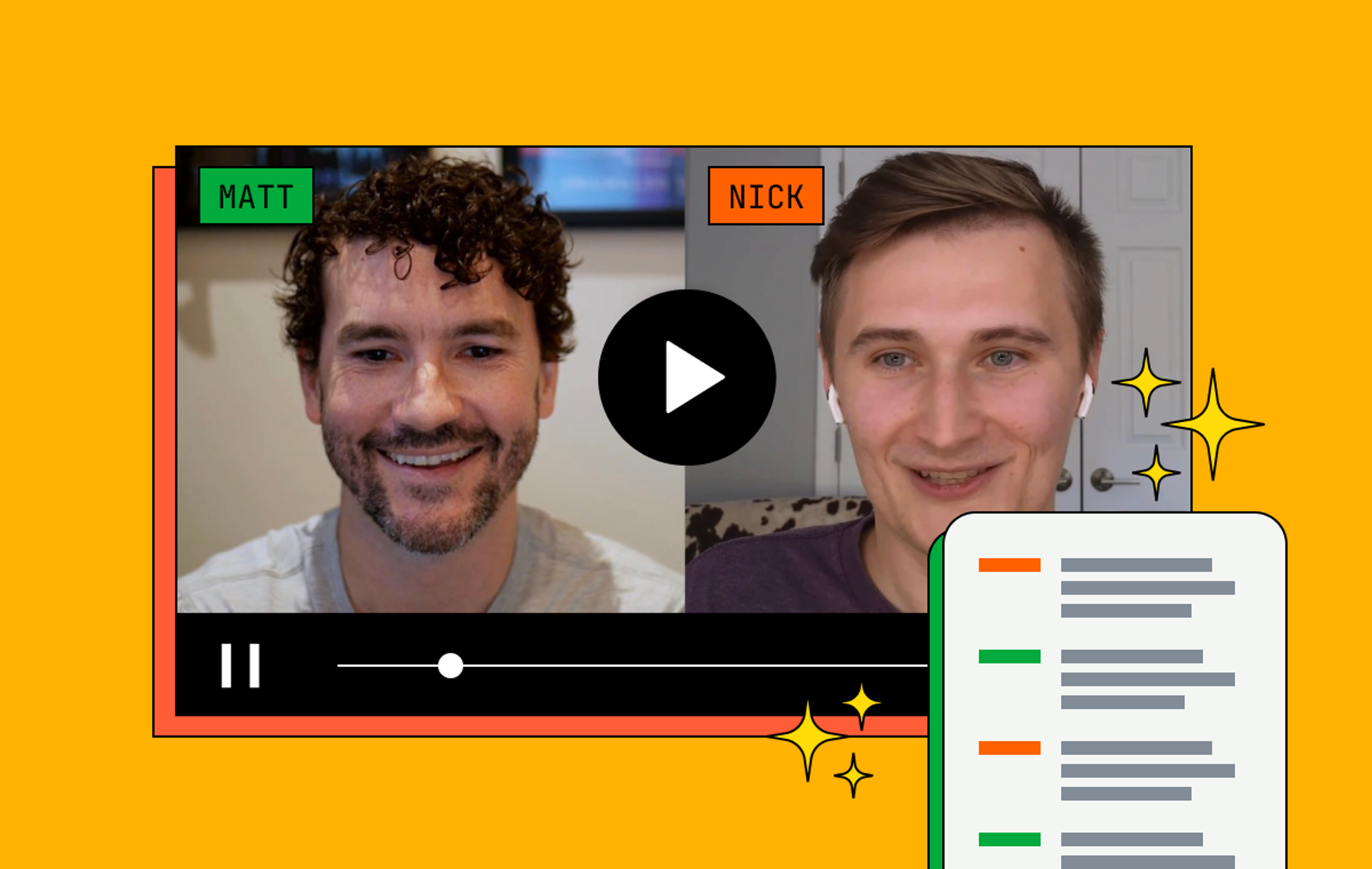
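Here’s a rough sketch of that workflow in Python, mostly to show the shape of the requests. A few assumptions worth calling out: the audio-only rendition is assumed to live at `audio.m4a` under the playback ID, the track payload fields (`url`, `type`, `language_code`, `name`) are how we’d expect the multi-track audio request to look, and `dub` is a hypothetical stand-in for whatever call you make to Sieve (its real API isn’t shown here). Check the current API reference before relying on any of it.

```python
from typing import Callable, Dict, List

MUX_API = "https://api.mux.com/video/v1"


def audio_rendition_url(playback_id: str) -> str:
    """URL of the audio-only static rendition (assumed file name: audio.m4a)."""
    return f"https://stream.mux.com/{playback_id}/audio.m4a"


def audio_track_request(asset_id: str, audio_url: str, language_code: str, name: str) -> dict:
    """Describe the request that attaches a dubbed file as an extra audio track."""
    return {
        "method": "POST",
        "url": f"{MUX_API}/assets/{asset_id}/tracks",
        "json": {
            "url": audio_url,
            "type": "audio",
            "language_code": language_code,
            "name": name,
        },
    }


def plan_dubs(
    asset_id: str,
    playback_id: str,
    languages: Dict[str, str],
    dub: Callable[[str, str], str],
) -> List[dict]:
    """Build one add-track request per language.

    `languages` maps a language code to a display name, e.g. {"es": "Spanish"}.
    `dub` is a hypothetical function: (source_audio_url, language_code) -> dubbed_audio_url.
    """
    source = audio_rendition_url(playback_id)
    return [
        audio_track_request(asset_id, dub(source, code), code, name)
        for code, name in languages.items()
    ]
```

Each dictionary that `plan_dubs` returns can be sent with the HTTP client of your choice, authenticating with your Mux access token ID and secret. Keeping the dubbing call behind a plain function makes it easy to swap providers later.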

Putting it all together, here’s an example endpoint running on Val.town. You give it an asset ID and a list of languages, and boom, multi-language audio tracks! You can fork it into your own account and dub to your heart’s content.

Chapter segmentation

We wrote a separate post about AI-generated chapters that goes into more detail about how to do this, but here’s the top-down view of how to get it working.

Mux features used:

- Auto-generated captions

- Mux Player

Workflow:

- Create a video with auto-generated captions enabled (or use your own captions if you already have them)

- Feed those captions into an LLM for segmenting

- Give that output to Mux Player for a playback experience with sweet chapters
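The glue code on either side of the LLM call might look something like the sketch below. It assumes the captions arrive as WebVTT and that you’ve prompted the model to reply with one `HH:MM:SS Title` line per chapter; that reply format is our own convention for this example, not something any model guarantees. The LLM call itself is left out, and the `startTime`/`value` keys are meant to match the chapter objects Mux Player accepts, so double-check them against the player docs.

```python
import re
from typing import List, Optional

# Start of a WebVTT cue timing line, e.g. "00:01:30.500 -->" (hours optional)
CUE_TIME = re.compile(r"(?:(\d+):)?(\d{2}):(\d{2})\.(\d{3})\s+-->")
# A chapter line in the LLM reply, e.g. "00:01:30 Setup" or "1:30 Setup"
CHAPTER_LINE = re.compile(r"^(?:(\d+):)?(\d{1,2}):(\d{2})\s+(.+)$")

CHAPTER_PROMPT = (
    "Split this transcript into chapters. Reply with one line per chapter, "
    "formatted as HH:MM:SS followed by a short title.\n\n"
)


def to_seconds(hours: Optional[str], minutes: str, seconds: str, millis: str = "0") -> float:
    return int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def vtt_to_timed_transcript(vtt: str) -> str:
    """Flatten WebVTT cues into '[Ns] text' lines that an LLM can segment."""
    lines, start = [], None
    for raw in vtt.splitlines():
        line = raw.strip()
        match = CUE_TIME.match(line)
        if match:
            start = to_seconds(*match.groups())
        elif line and start is not None:
            lines.append(f"[{int(start)}s] {line}")
        elif not line:
            start = None  # a blank line ends the current cue
    return "\n".join(lines)


def parse_chapters(reply: str) -> List[dict]:
    """Turn the LLM reply into chapter objects, ignoring lines that do not match."""
    chapters = []
    for line in reply.splitlines():
        match = CHAPTER_LINE.match(line.strip())
        if match:
            hours, minutes, seconds, title = match.groups()
            chapters.append(
                {"startTime": to_seconds(hours, minutes, seconds), "value": title.strip()}
            )
    return sorted(chapters, key=lambda chapter: chapter["startTime"])
```

You’d send `CHAPTER_PROMPT + vtt_to_timed_transcript(captions)` to your model, run the reply through `parse_chapters`, and serialize the result as JSON for the player. Sorting by start time guards against a model that returns chapters out of order.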

Visual moderation

There are lots of different types of moderation that you may need to perform on your videos. You might need to check for adult content, pirated sports live streams (something we have experience with), or anything else that you need to flag for manual checking. All of these use cases share a similar process — grab images from the video and analyze the content with an AI model to identify what’s in the scene.

Mux features used:

- Thumbnail URLs

Workflow:

- Upload a video to Mux

- Grab individual frames from the video using our thumbnail URLs

- Process the images using something like Hive or a self-hosted AI model

- If needed, delete the videos that break your rules
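As a minimal sketch of the frame-sampling and decision steps: the code below builds thumbnail URLs at a fixed interval and picks out any labels whose score crosses a threshold. The `time` and `width` query parameters and the delete endpoint are written the way we’d expect them to look, and the score format (one dictionary of label to confidence per frame) is a made-up shape for whatever Hive or your own model returns, so treat all of those as assumptions.

```python
from typing import Dict, List, Set
from urllib.parse import urlencode

MUX_API = "https://api.mux.com/video/v1"


def thumbnail_urls(
    playback_id: str,
    duration_seconds: float,
    every_seconds: int = 10,
    width: int = 640,
) -> List[str]:
    """One thumbnail URL every `every_seconds`, starting at the first frame."""
    times = range(0, max(int(duration_seconds), 1), every_seconds)
    return [
        f"https://image.mux.com/{playback_id}/thumbnail.jpg?"
        + urlencode({"time": t, "width": width})
        for t in times
    ]


def flagged_labels(frame_scores: List[Dict[str, float]], threshold: float = 0.9) -> Set[str]:
    """Labels whose confidence meets the threshold in at least one frame.

    `frame_scores` is a hypothetical shape: one {label: confidence} dict per frame.
    """
    return {
        label
        for scores in frame_scores
        for label, score in scores.items()
        if score >= threshold
    }


def delete_asset_request(asset_id: str) -> dict:
    """Describe the request that deletes an asset that broke the rules."""
    return {"method": "DELETE", "url": f"{MUX_API}/assets/{asset_id}"}
```

Sampling every 10 seconds is an arbitrary starting point. A shorter interval catches more but costs more per video, so tune it to how much risk you’re willing to accept.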

Hate speech (or otherwise) moderation

One of those other types of moderation mentioned above is for what the video is actually saying. A visual moderation toolchain will catch things displayed in the video, but what if the video is a person playing with a cute puppy while discussing plans to destroy the world? This time instead of analyzing thumbnails, we’ll check the automagic captions!

Mux features used:

- Auto-generated captions

Workflow:

- Create a video with auto-generated captions enabled

- Feed those captions into an LLM with a prompt that asks it to identify what you’re looking for (hate speech, vulgarity, etc.)

- If needed, delete the video

- Note: another suggestion is to just remove the playback ID and flag it in your systems for manual review. This way you can add the playback ID back if it’s acceptable.
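Here’s one way the prompt and the follow-up decision could be wired together. The prompt wording and the `{"flagged": [...]}` reply shape are conventions we made up for this sketch, and the playback ID endpoint is written the way we’d expect it to look, so verify it against the API reference. Anything the model returns that can’t be parsed is routed to manual review rather than silently passing.

```python
import json
from typing import List

MUX_API = "https://api.mux.com/video/v1"


def build_moderation_prompt(transcript: str, categories: List[str]) -> str:
    """Ask the model for a JSON-only verdict covering the given categories."""
    wanted = ", ".join(categories)
    return (
        "You are a content moderator. Check the transcript below for: "
        f"{wanted}.\n"
        'Reply with JSON only, shaped like {"flagged": ["category", ...]}, '
        "using an empty list if nothing applies.\n\n"
        f"Transcript:\n{transcript}"
    )


def parse_verdict(reply: str) -> dict:
    """Parse the model's reply; unparseable replies are sent to manual review."""
    try:
        data = json.loads(reply)
        flagged = [c for c in data.get("flagged", []) if isinstance(c, str)]
    except (json.JSONDecodeError, AttributeError, TypeError):
        return {"flagged": [], "needs_review": True}
    return {"flagged": flagged, "needs_review": bool(flagged)}


def unpublish_request(asset_id: str, playback_id: str) -> dict:
    """Describe the request that removes a playback ID so the video stops streaming."""
    return {
        "method": "DELETE",
        "url": f"{MUX_API}/assets/{asset_id}/playback-ids/{playback_id}",
    }
```

If `parse_verdict` comes back with `needs_review` set, you’d send the `unpublish_request` and flag the asset in your own system. The asset itself sticks around, so a human can restore playback if the flag turns out to be a false positive.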

Summarizing and tagging

There’s a lot of information in video transcripts that can be used to summarize a video or generate a list of subjects that were discussed. You could take this a step further and turn the generated summary into a video title too, automating the whole metadata creation process.

Mux features used:

- Auto-generated captions

Workflow:

- Create a video with auto-generated captions enabled

- Give the captions to an LLM with a prompt that asks it to summarize the transcript. You can also be specific about how long or short you’d like the summary to be

- Give the summary back to the LLM and prompt for a title based on it

You could follow the same process to extract tags that represent subjects discussed in the video. If you’re using a service that supports function calling, you could ask for the summary, title, and tags in a single prompt, and the LLM can call your different “tools” to retrieve all of them in parallel.
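To make the function calling idea a bit more concrete, here’s a sketch of a slightly simpler variant: a single tool that asks for the summary, title, and tags together, instead of one tool per field. The tool name `save_video_metadata` is hypothetical, and the definition follows the JSON Schema style that several LLM APIs use for tools; the exact envelope around it varies by provider, so adapt it to whichever one you’re using.

```python
import json

# Hypothetical tool definition in JSON Schema style; wrap it as your provider requires.
METADATA_TOOL = {
    "name": "save_video_metadata",
    "description": "Store a summary, title, and tags generated from a video transcript.",
    "parameters": {
        "type": "object",
        "properties": {
            "summary": {"type": "string", "description": "Two or three sentences."},
            "title": {"type": "string", "description": "A short title based on the summary."},
            "tags": {
                "type": "array",
                "items": {"type": "string"},
                "description": "Subjects discussed in the video.",
            },
        },
        "required": ["summary", "title", "tags"],
    },
}


def parse_metadata(arguments_json: str, max_tags: int = 8) -> dict:
    """Validate the tool-call arguments and normalize the tags."""
    args = json.loads(arguments_json)
    missing = [key for key in METADATA_TOOL["parameters"]["required"] if key not in args]
    if missing:
        raise ValueError(f"tool call is missing fields: {missing}")
    tags = []
    for tag in args["tags"]:
        normalized = tag.strip().lower()
        if normalized and normalized not in tags:  # drop blanks and duplicates
            tags.append(normalized)
    return {
        "summary": args["summary"].strip(),
        "title": args["title"].strip(),
        "tags": tags[:max_tags],
    }
```

The model’s tool call arrives as a JSON string of arguments, which `parse_metadata` checks before you write anything to your database. Lowercasing and de-duplicating tags keeps “Video” and “video” from showing up as separate subjects.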

Tell us about your AI video use case

We have many more recipes coming soon! Reach out if you have an AI use case you’d like us to tackle and we’ll make a recipe for it.